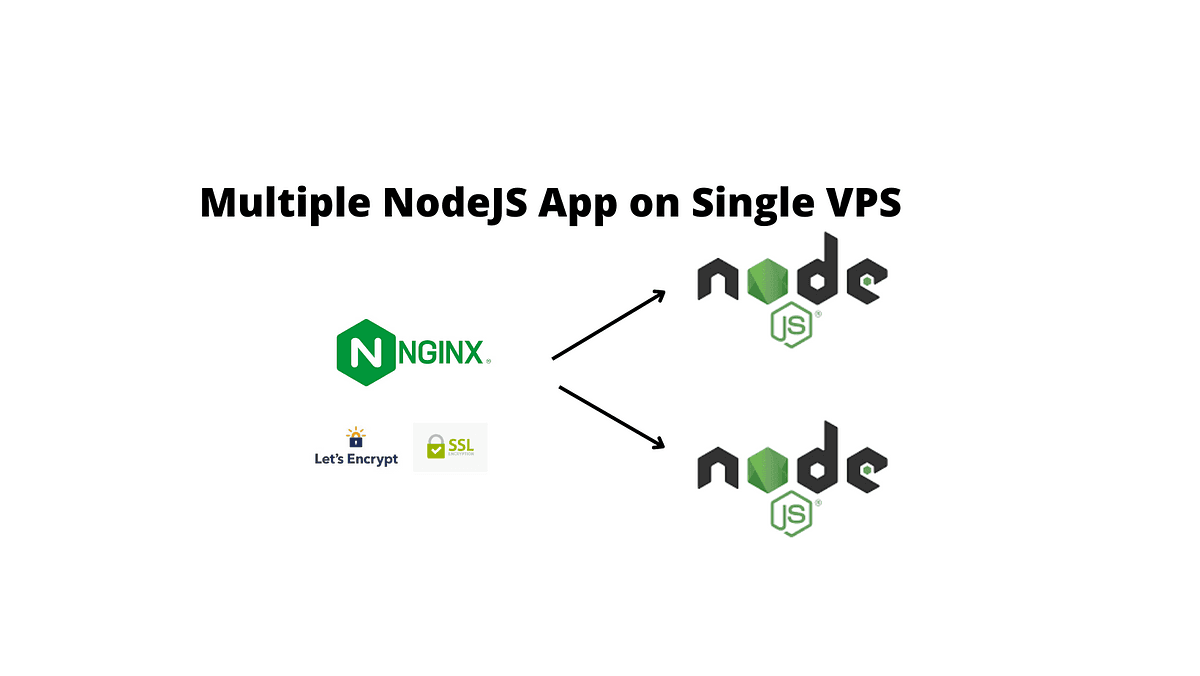

Deep Dive: How Nginx, Let’s Encrypt, and Node.js Work Together

A deep dive into how Nginx, Let’s Encrypt, and Node.js work together: how Nginx proxies requests to other services, and how it manages multiple apps on one server.

Suppose you set up a Node.js backend running on localhost port 5000 (which also serves Swagger documentation), put Nginx in front of it, and secure it with a Let’s Encrypt SSL certificate so the site answers on both HTTP and HTTPS, with HTTP redirected to HTTPS. Your Nginx config file will then contain something like this:

```nginx
server {
    server_name subdomain.example.com; # replace with your domain

    location / {
        proxy_pass http://127.0.0.1:5000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }

    listen 443 ssl; # managed by Certbot
    ssl_certificate /etc/letsencrypt/live/subdomain.example.com/fullchain.pem; # managed by Certbot
    ssl_certificate_key /etc/letsencrypt/live/subdomain.example.com/privkey.pem; # managed by Certbot
    include /etc/letsencrypt/options-ssl-nginx.conf; # managed by Certbot
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; # managed by Certbot

    # Swagger UI
    location /api-docs/ {
        proxy_pass http://127.0.0.1:5000/api-docs/;
        proxy_set_header Host $host;
    }
}

server {
    if ($host = subdomain.example.com) {
        return 301 https://$host$request_uri;
    } # managed by Certbot

    listen 80;
    server_name subdomain.example.com;
    return 404; # managed by Certbot
}
```

Below, I explain step by step how all of this works.

1. First server block (HTTPS + proxy to the Node.js app on port 5000)

```nginx
server {
    server_name subdomain.example.com; # replace with your domain

    location / {
        proxy_pass http://127.0.0.1:5000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }

    listen 443 ssl; # managed by Certbot
    ssl_certificate /etc/letsencrypt/live/subdomain.example.com/fullchain.pem; # managed by Certbot
    ssl_certificate_key /etc/letsencrypt/live/subdomain.example.com/privkey.pem; # managed by Certbot
    include /etc/letsencrypt/options-ssl-nginx.conf; # managed by Certbot
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem; # managed by Certbot

    # Swagger UI
    location /api-docs/ {
        proxy_pass http://127.0.0.1:5000/api-docs/;
        proxy_set_header Host $host;
    }
}
```

Let’s unpack each line/group:

`server { … }`

This opens a “server block,” which tells Nginx: “Everything inside here applies when a request matches this block’s criteria (e.g., domain name, port).”

`server_name subdomain.example.com;`

What it does: Nginx checks the `Host` header of incoming HTTP/S requests. If the Host matches `subdomain.example.com`, it uses this block.

Why it’s important: You can have many server blocks, each keyed to different domains or subdomains. Here, we declare that this block is for `subdomain.example.com`. If you requested a name that isn’t listed (say, `other.example.com`), Nginx would skip this block.

`location / { … }`

Meaning: “For any URI path that starts with `/`…” (i.e., all paths, unless a more specific `location` matches).

This block’s purpose: act as a reverse proxy, forwarding requests to a backend (your Node.js app running on 127.0.0.1:5000).

Inside this `location /`:

`proxy_pass http://127.0.0.1:5000;`

Every request that matches `/` is sent to `http://127.0.0.1:5000`, where your Node.js app listens. So if someone requests `https://subdomain.example.com/users`, Nginx connects to `http://127.0.0.1:5000/users` and relays the response back. This is the core reverse-proxy directive.

`proxy_http_version 1.1;`

Sets the HTTP version Nginx uses when it talks to the backend. By default, Nginx uses HTTP/1.0. Switching to 1.1 is necessary for features like `Connection: upgrade` (for WebSockets) or chunked transfer encoding. In practice, if your backend expects keep-alive or WebSocket support, you want 1.1.

`proxy_set_header Upgrade $http_upgrade;`

Copies the client’s `Upgrade` header (if present) into the proxied request. `Upgrade` is a hop-by-hop header, so Nginx does not pass it to the backend on its own; this line forwards it explicitly. Typical use: if the client is trying to “upgrade” to a WebSocket connection (`Upgrade: websocket`), Nginx forwards that header to your Node.js app (assuming the app supports WebSockets, for example through a library such as Socket.IO or ws).

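`proxy_set_header Connection 'upgrade';`

Forces the `Connection` header sent to the backend to be `upgrade`. Combined with `proxy_http_version 1.1`, this makes sure WebSocket handshakes pass through. Without it, the backend would see `Connection: close` (Nginx’s default for proxied requests), which blocks the WebSocket upgrade. Note that this sends `Connection: upgrade` on every request, not only on WebSocket ones; the Nginx WebSocket documentation instead uses a `map` that sets `Connection` to `upgrade` only when the client sent an `Upgrade` header, and to `close` otherwise.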

`proxy_set_header Host $host;`

Sets the `Host` header in the proxied request to the original `$host` (e.g., `subdomain.example.com`). Why? Many backends need to know the original hostname (for generating absolute URLs or for virtual-host logic). If you didn’t set this, Nginx would send `Host: 127.0.0.1:5000` to your app, which can break URL generation or cause CORS issues.

`proxy_cache_bypass $http_upgrade;`

Tells Nginx not to serve a cached response when the request carries a non-empty `Upgrade` header (i.e., a WebSocket upgrade). In short: whenever the protocol is being upgraded, skip the proxy cache and make a fresh proxied connection.

This only matters if you’ve configured `proxy_cache` elsewhere; it’s a safeguard against answering WebSocket handshakes from a cache.
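To make the header rules above concrete, here is a small TypeScript simulation (a sketch, not Nginx’s source) of which headers reach the backend from `location /`. It assumes Nginx’s documented defaults: `Host` becomes `$proxy_host`, `Connection` becomes `close`, hop-by-hop headers such as `Upgrade` are dropped unless set explicitly, and a header whose value evaluates to an empty string is not sent at all. The helper names (`upstreamHeaders`, `evaluate`, `cacheBypassed`) are hypothetical, and only `$host` and `$http_upgrade` are modelled.

```typescript
// Simplified model of the request headers nginx sends to the backend.
// Hypothetical helper names; this is not nginx source code.
type HeaderMap = Record<string, string>; // header names in lowercase

// Hop-by-hop headers nginx does not pass upstream by default
// (only the two relevant here are modelled).
const NOT_FORWARDED = ["connection", "upgrade"];

// Evaluate a proxy_set_header value; only $host and $http_upgrade are supported.
function evaluate(value: string, client: HeaderMap): string {
  if (value === "$http_upgrade") return client["upgrade"] || "";
  if (value === "$host") return (client["host"] || "").split(":")[0].toLowerCase();
  return value; // a literal such as 'upgrade'
}

function upstreamHeaders(
  client: HeaderMap,
  proxyHost: string, // host:port from proxy_pass, e.g. "127.0.0.1:5000"
  setHeaders: Array<[string, string]> // proxy_set_header name/value pairs
): HeaderMap {
  const out: HeaderMap = {};
  // 1. Start from the client's headers, minus the hop-by-hop ones.
  for (const name of Object.keys(client)) {
    if (NOT_FORWARDED.indexOf(name) === -1) out[name] = client[name];
  }
  // 2. Built-in defaults: Host $proxy_host; Connection close;
  out["host"] = proxyHost;
  out["connection"] = "close";
  // 3. Explicit proxy_set_header directives override the defaults.
  for (const [name, value] of setHeaders) {
    const key = name.toLowerCase();
    const evaluated = evaluate(value, client);
    if (evaluated === "") delete out[key]; // empty values are not sent
    else out[key] = evaluated;
  }
  return out;
}

// proxy_cache_bypass: skip the cache if any value is non-empty and not "0".
function cacheBypassed(values: string[]): boolean {
  return values.some((v) => v !== "" && v !== "0");
}

// The proxy_set_header lines from `location /` in the config above.
const locationRoot: Array<[string, string]> = [
  ["Upgrade", "$http_upgrade"],
  ["Connection", "upgrade"],
  ["Host", "$host"],
];

const wsRequest: HeaderMap = {
  host: "subdomain.example.com",
  upgrade: "websocket",
  connection: "Upgrade",
};
console.log(upstreamHeaders(wsRequest, "127.0.0.1:5000", locationRoot));
```

Feeding it a normal (non-WebSocket) request shows that this config still sends `Connection: upgrade` upstream; the `map $http_upgrade $connection_upgrade` idiom from the Nginx WebSocket documentation avoids that.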

`listen 443 ssl;`

Instructs Nginx to listen on port 443 (the standard HTTPS port) and speak TLS/SSL on that port.

When a client requests `https://subdomain.example.com`, it hits this block. A client using plain `http` (port 80) won’t match here; the second block handles port 80.

SSL certificate directives (all “managed by Certbot”):

- `ssl_certificate /etc/letsencrypt/live/subdomain.example.com/fullchain.pem;` points to the public certificate chain file (Let’s Encrypt gave you this).
- `ssl_certificate_key /etc/letsencrypt/live/subdomain.example.com/privkey.pem;` is your private key. NEVER share this with anyone.
- `include /etc/letsencrypt/options-ssl-nginx.conf;` pulls in a pre-made snippet from Certbot that sets recommended SSL parameters (protocols, ciphers, etc.).
- `ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;` specifies strong Diffie-Hellman parameters for DHE cipher suites, which provide forward secrecy.

Collectively, these four lines make TLS work securely.

`# Swagger UI` comment

Just a human-readable note that the following `location` is for serving your API docs.

`location /api-docs/ { … }`

Another `location` block, but more specific: it only matches URLs that begin with `/api-docs/`.

Inside it:

- `proxy_pass http://127.0.0.1:5000/api-docs/;` forwards any request like `GET /api-docs/index.html` to `http://127.0.0.1:5000/api-docs/index.html`. Because this `proxy_pass` includes a URI (`/api-docs/`), Nginx replaces the matched prefix `/api-docs/` with that URI; since both are identical, the path arrives unchanged.
- `proxy_set_header Host $host;` as before, ensures the backend sees `Host: subdomain.example.com` (useful if your app cares about the Host header when serving Swagger UI).
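The prefix-replacement rule can be sketched as a tiny TypeScript function. This is a simplified model under stated assumptions (prefix locations only, no URI normalization, no variables in `proxy_pass`); `upstreamUrl` is a hypothetical name, not an Nginx API.

```typescript
// Simplified model of how proxy_pass builds the upstream URL for a prefix
// location. Ignores URI normalization, variables, and regex locations.
function upstreamUrl(locationPrefix: string, proxyPass: string, requestUri: string): string {
  const m = /^(https?:\/\/[^\/]+)(\/.*)?$/.exec(proxyPass);
  if (!m) throw new Error(`unsupported proxy_pass value: ${proxyPass}`);
  const origin = m[1];
  const uriPart: string | undefined = m[2];

  if (uriPart === undefined) {
    // No URI after host:port: the client's URI is passed through unchanged.
    return origin + requestUri;
  }
  // A URI is present (even just "/"): the matched location prefix is replaced by it.
  if (!requestUri.startsWith(locationPrefix)) {
    throw new Error(`${requestUri} does not match location ${locationPrefix}`);
  }
  return origin + uriPart + requestUri.slice(locationPrefix.length);
}
```

One consequence worth knowing: inside `location /api/`, `proxy_pass http://127.0.0.1:5000/;` strips `/api/` from the forwarded path (so `/api/users` becomes `/users`), while `proxy_pass http://127.0.0.1:5000;` keeps the path as-is.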

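Why have two `location` blocks? `location /` catches all paths by default. But if `/api-docs/` needs special handling (e.g., different headers, caching rules, or a different `proxy_pass` path), a more specific `location /api-docs/` overrides the generic `location /`. For prefix locations, the order in the file does not matter: Nginx always picks the longest matching prefix. In this particular config, the `/api-docs/` block forwards to the same backend as `location /`, just without the `proxy_http_version` and WebSocket headers.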

2. Second server block (HTTP → HTTPS redirect & default 404)

```nginx
server {
    if ($host = subdomain.example.com) {
        return 301 https://$host$request_uri;
    } # managed by Certbot

    listen 80;
    server_name subdomain.example.com;
    return 404; # managed by Certbot
}
```

Line by line:

`server { … }`

Begins a second server block. This one is meant to catch plain HTTP (port 80) traffic.

`if ($host = subdomain.example.com) { return 301 https://$host$request_uri; }`

This `if` block triggers only when the incoming `Host` header exactly matches `subdomain.example.com`. If it does, Nginx sends a `301 Moved Permanently` redirect to the HTTPS version of the same URI, e.g. `https://subdomain.example.com/some/path?query=foo`. `$request_uri` is the full original path plus query string (e.g., `/foo/bar?x=1`). In plain English: “If someone comes in via HTTP for subdomain.example.com, redirect them to the same URL but with HTTPS.”

`listen 80;`

Tells Nginx to listen on port 80 (the default HTTP port). Requests on port 80 go into this server block as long as the `Host` header matches `subdomain.example.com` (due to `server_name` below).

`server_name subdomain.example.com;`

Again, matches `Host: subdomain.example.com`. If a request arrives on port 80 for a domain that isn’t listed in any server block, Nginx falls back to the default server for port 80: the block marked `default_server`, or, if none is marked, the first server block defined for that port. That is not necessarily this one.

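`return 404;`

If the `if` condition didn’t trigger (the `Host` header was something else), Nginx returns a 404. Because of `server_name`, that can only happen when this block is also acting as the default server for port 80, for example when it is the only port-80 block. Certbot generates it this way so that only the exact hostname gets redirected; anything else gets a 404 instead of a redirect or accidentally served content.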
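The whole port-80 block boils down to one decision, modelled here as a TypeScript sketch. `port80Block` and `BlockResult` are hypothetical names, and `$host` is approximated as the `Host` header lowercased with any port removed.

```typescript
// Model of the Certbot-generated port-80 server block (hypothetical names).
interface BlockResult {
  status: number;
  location?: string; // only set for redirects
}

function port80Block(serverName: string, hostHeader: string, requestUri: string): BlockResult {
  // Approximation of $host: the Host header, lowercased, without a port.
  const host = hostHeader.split(":")[0].toLowerCase();
  if (host === serverName) {
    // if ($host = subdomain.example.com) { return 301 https://$host$request_uri; }
    return { status: 301, location: `https://${host}${requestUri}` };
  }
  // return 404; only reached when this block is the default server for port 80.
  return { status: 404 };
}
```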

3. Why two server blocks (and could you have more)?

HTTP (port 80) vs HTTPS (port 443):

Browsers default to port 80 for `http://…` and port 443 for `https://…`. You almost always need one server block listening on port 80 (plain HTTP) so you can catch users who type `http://…` or click an old link, then redirect them to HTTPS. The other block listens on port 443 with SSL enabled, so that encrypted requests get handled properly.

Separation of concerns:

The first block (port 443 + SSL) contains your real “proxy to Node.js” logic.

The second block (port 80) simply performs a redirect → “Oh, you wanted plain HTTP? Let me flip you over to HTTPS.” If the host is wrong, it just gives a 404.

Could you combine them?

Technically, you could write a single server block that listens on both 80 and 443 and then use `if ($scheme = "http")` inside it to redirect. But that makes the config harder to read and mixes plain-HTTP and TLS concerns in one place, which makes mistakes easier. Best practice is to keep them separate.

You can have three or more server blocks if you need:

- One for `subdomain.example.com` (HTTPS).
- One for `api.example.com` (HTTPS).
- One for `www.example.com` (maybe redirecting to non-www).
- One for each port (80 vs 443) for each domain.
- One for an IP-based catch-all or a default vhost.

Each “server block” is just a set of listening sockets plus matching rules (`server_name`, port). You can define any number of blocks (e.g., `server_name site1.com`, `server_name site2.com`, etc.), and each block can have its own `listen`, `ssl`, and `location` directives.

4. How Nginx routes requests to the appropriate backend when you have multiple front-ends/back-ends

`listen PORT [ssl]; server_name NAME;`

These define the “front door”: which IP:port and `Host` header combinations map into that block. If a request comes in on 10.0.0.1:443 with `Host: subdomain.example.com`, Nginx finds the server block with `listen 443 ssl; server_name subdomain.example.com;`.

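`location /some/path/ { … }`

Once inside a server block, Nginx matches the URI against `location` blocks in this order:

- Exact matches (`location = /exact { … }`): if one matches, it is used immediately.
- Prefix matches: Nginx finds the longest matching prefix (`location / { … }` is simply the shortest possible prefix). If that longest prefix is marked `^~`, it is used and regexes are skipped.
- Regex matches (`location ~ \.php$ { … }`), checked in the order they appear in the file; the first match wins.
- If no regex matches, the longest prefix found earlier is used.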

Only one `location` block ends up handling the request. If you have multiple back-ends for different subpaths (e.g., `/api/`, `/static/`, `/admin/`), you write a separate `location` block for each:

```nginx
server {
    listen 443 ssl;
    server_name example.com;

    # Serve static files directly from disk
    location /static/ {
        root /var/www/example.com;
    }

    # Proxy any /api/ calls to one backend
    location /api/ {
        proxy_pass http://127.0.0.1:5000;
        ...
    }

    # Proxy everything else to a different backend
    location / {
        proxy_pass http://127.0.0.1:8000;
        ...
    }
}
```

`proxy_pass http://UPSTREAM;`, `fastcgi_pass`, or `uwsgi_pass`

These directives forward the request, after matching a `location`, to an upstream service. You can define multiple `upstream` blocks (in the `http` context, outside any `server` block) so that you can load-balance among several app servers. For example:

```nginx
upstream flask_app {
    server 127.0.0.1:5000;
    server 127.0.0.1:5001;
    # server backend2.company.internal:5000;
}

server {
    listen 443 ssl;
    server_name example.com;

    location / {
        proxy_pass http://flask_app;
        # …other proxy headers…
    }
}
```

With that, Nginx uses round-robin by default (or whatever load-balancing method you configure) to pick one of the backend addresses.
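Round-robin selection itself is simple; the sketch below models it for equal-weight servers. `RoundRobinUpstream` is a hypothetical class, not part of Nginx. Nginx’s real implementation is a weighted round-robin, and unless the upstream has a shared memory `zone`, each worker process keeps its own rotation state, so the order seen across workers can differ.

```typescript
// Minimal round-robin picker: a simplified stand-in for nginx's default
// upstream balancing with equal weights (no weights, max_fails, or shared state).
class RoundRobinUpstream {
  private readonly servers: string[];
  private next = 0;

  constructor(servers: string[]) {
    if (servers.length === 0) throw new Error("an upstream needs at least one server");
    this.servers = servers.slice();
  }

  // Return the server for the next request, cycling through the list.
  pick(): string {
    const server = this.servers[this.next];
    this.next = (this.next + 1) % this.servers.length;
    return server;
  }
}

// Mirrors `upstream flask_app { server 127.0.0.1:5000; server 127.0.0.1:5001; }`.
const flaskApp = new RoundRobinUpstream(["127.0.0.1:5000", "127.0.0.1:5001"]);
const picks = [flaskApp.pick(), flaskApp.pick(), flaskApp.pick()];
```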

How to handle multiple “front-ends” (domains) pointing to different back-ends:

Simply create separate server blocks, each with its own `server_name` and `location` + `proxy_pass`. Example:

```nginx
# Block for subdomain.example.com → Node.js API on port 5000
server {
    listen 443 ssl;
    server_name subdomain.example.com;
    location / {
        proxy_pass http://127.0.0.1:5000;
    }
    # …SSL certs for subdomain.example.com…
}

# Block for blogsite.example.com → a Node.js app on port 4000
server {
    listen 443 ssl;
    server_name blogsite.example.com;
    location / {
        proxy_pass http://127.0.0.1:4000;
    }
    # …SSL certs for blogsite…
}

# Block for www.example.com → serve static HTML from /var/www/html
server {
    listen 443 ssl;
    server_name www.example.com;
    root /var/www/html;
    index index.html;
    # …SSL certs for www.example.com…
}
```

Port separation vs domain separation vs path separation:

- Port separation: Two server blocks can both use `server_name example.com` if one listens on port 80 and the other on 443. (That’s exactly what Certbot scaffolds for you.)
- Domain separation: Two blocks can listen on the same port (e.g., both on 443) but have different `server_name` values. Nginx picks the matching block based on the `Host` header.
- Path separation: Within a single server block, you can dispatch different URIs to different back-ends (using `location`).

In effect, by combining these three axes, you can have Nginx handle arbitrarily complex routing, e.g.:

- `https://api.site.com/v1/…` → `127.0.0.1:5000`
- `https://api.site.com/v2/…` → `127.0.0.1:6000`
- `https://www.site.com/` → static HTML
- `http://blog.site.com/` → redirect to `https://blog.site.com/`
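A routing table along those three axes can be sketched in TypeScript. The rules mirror the example list; the `/var/www/html` root for `www.site.com` is an assumption borrowed from the earlier static-site example, and `route`, `Rule`, and `Action` are hypothetical names. The port is inferred from the URL scheme, and path matching is plain longest-prefix (no regex locations).

```typescript
// Hypothetical model of the three routing axes: port (scheme), host, path prefix.
type Action =
  | { kind: "proxy"; upstream: string }
  | { kind: "static"; root: string }
  | { kind: "redirect"; location: string }
  | { kind: "notFound" };

interface Rule {
  port: number; // axis 1: which listen socket (80 or 443)
  host: string; // axis 2: server_name
  pathPrefix: string; // axis 3: location prefix
  action: Action; // for redirects, `location` holds the target origin
}

const rules: Rule[] = [
  { port: 443, host: "api.site.com", pathPrefix: "/v1/", action: { kind: "proxy", upstream: "127.0.0.1:5000" } },
  { port: 443, host: "api.site.com", pathPrefix: "/v2/", action: { kind: "proxy", upstream: "127.0.0.1:6000" } },
  { port: 443, host: "www.site.com", pathPrefix: "/", action: { kind: "static", root: "/var/www/html" } },
  { port: 80, host: "blog.site.com", pathPrefix: "/", action: { kind: "redirect", location: "https://blog.site.com" } },
];

function route(url: string): Action {
  const m = /^(https?):\/\/([^\/?#]+)([^?#]*)/.exec(url);
  if (!m) throw new Error(`cannot parse URL: ${url}`);
  const port = m[1] === "https" ? 443 : 80;
  const host = m[2].toLowerCase();
  const path = m[3] || "/";

  // Among rules for this port + host, the longest matching path prefix wins.
  let best: Rule | undefined;
  for (const rule of rules) {
    const matches = rule.port === port && rule.host === host && path.startsWith(rule.pathPrefix);
    if (matches && (!best || rule.pathPrefix.length > best.pathPrefix.length)) best = rule;
  }
  if (!best) return { kind: "notFound" };

  const action = best.action;
  if (action.kind === "redirect") {
    // Keep the original path, like `return 301 https://$host$request_uri;`.
    return { kind: "redirect", location: action.location + path };
  }
  return action;
}
```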

5. In-depth explanation of “why two servers” and “is one useless?”

Not useless—each serves a purpose:

Block 1 (port 443 + SSL):

- Handles all HTTPS traffic.
- Terminates TLS: it decrypts the client’s traffic using session keys negotiated during the handshake, in which the private key from `ssl_certificate_key` proves the server’s identity.
- Then proxies the request to your backend.

Block 2 (port 80):

- Handles all plain HTTP traffic.
- No TLS. If someone types `http://…`, Nginx cannot answer securely, so we just `301` them to HTTPS.
- If anybody hits `http://` with the wrong Host, we `return 404` (instead of accidentally falling back to another vhost).

If you removed the second block, port 80 requests would not be redirected. Worse, another server block might act as the default for port 80 (perhaps serving an empty page or your distro’s default), or, if nothing listens on port 80 at all, visitors typing `http://…` would not reach your site.

Could you combine them?

You might see configs like:

```nginx
server {
    listen 80;
    listen 443 ssl;
    server_name example.com;

    if ($scheme = http) {
        return 301 https://$host$request_uri;
    }

    # SSL cert directives…
    ssl_certificate …;
    ssl_certificate_key …;

    # proxy_pass to backend…
}
```

This technically “works,” but it has drawbacks:

- The `if` directive is easy to misuse and is widely treated as an Nginx anti-pattern. A bare `return` inside `if`, as here, is one of the safe uses; the known problems arise mostly inside `location` blocks.
- Every request, including every HTTPS request, has to pass through the `if ($scheme = http)` check.
- You lose the clean separation of “here’s my port 80 logic” vs “here’s my port 443 logic.”

For maintainability and clarity, splitting into two blocks is the strongly recommended approach.

Could you define “three” or more server blocks?

Absolutely. For example:

- Server A: `listen 80; server_name subdomain.example.com; return 301 https://…`
- Server B: `listen 80; server_name blogsite.example.com; return 301 https://…`
- Server C: `listen 443 ssl; server_name subdomain.example.com;` → proxy to the Node.js API on port 5000
- Server D: `listen 443 ssl; server_name blogsite.example.com;` → proxy to Node.js on port 4000
- Server E: catch-all for any other hostnames on 80 or 443 → return 404

And so on. Each block isolates:

- Which domain(s) it responds to (`server_name`)
- Which port(s) it listens on (`listen`)
- What it does with the request (`return`, `root`, `proxy_pass`, etc.)

6. “How Nginx works” when you have multiple front-ends/back-ends

Step 1: Incoming TCP connection → Nginx `listen`

A client (browser, curl, Postman) does a DNS lookup for `subdomain.example.com` and gets your server’s IP. It then opens a TCP connection to port 80 or 443 on that IP.

Nginx already has sockets listening on those ports, so the OS hands the connection to Nginx.

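Step 2: Nginx picks a server block

- First, Nginx checks which `listen` line matches (port 80 vs 443).
- Among all server blocks listening on that port, it tries to match `server_name`. If there’s an exact match (`subdomain.example.com`), it picks that block. If there are wildcard or regex server names, it follows its priority rules: exact name first, then the longest wildcard starting with `*`, then the longest wildcard ending with `*`, then the first matching regex in config order.
- If nothing matches, it falls back to the default server for that port: the block marked `default_server`, or otherwise the first server block defined for that port.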
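Those selection rules can be sketched as a function. This is a simplified TypeScript model, not Nginx code: it ignores regex server names and per-IP `listen` addresses, and the `label` field is a hypothetical annotation for readability. For HTTPS, the name used during the TLS handshake comes from SNI; this sketch only looks at the `Host` value.

```typescript
// Simplified model of nginx's server-block selection for one request.
interface ServerBlock {
  label: string; // hypothetical name for the block, for readability
  port: number; // from `listen`
  names: string[]; // from `server_name`
  defaultServer?: boolean; // `listen ... default_server`
}

// Block owning the longest server_name (by length) that satisfies `matches`.
function longestMatch(blocks: ServerBlock[], matches: (name: string) => boolean): ServerBlock | undefined {
  let best: ServerBlock | undefined;
  let bestLength = -1;
  for (const block of blocks) {
    for (const name of block.names) {
      if (matches(name) && name.length > bestLength) {
        best = block;
        bestLength = name.length;
      }
    }
  }
  return best;
}

function selectServer(blocks: ServerBlock[], port: number, hostHeader: string): ServerBlock | undefined {
  const host = hostHeader.split(":")[0].toLowerCase();
  const onPort = blocks.filter((b) => b.port === port);

  // 1. Exact name.
  const exact = onPort.find((b) => b.names.indexOf(host) !== -1);
  if (exact) return exact;
  // 2. Longest wildcard starting with "*", e.g. "*.example.com".
  const leading = longestMatch(onPort, (n) => n.startsWith("*.") && host.endsWith(n.slice(1)));
  if (leading) return leading;
  // 3. Longest wildcard ending with "*", e.g. "www.example.*".
  const trailing = longestMatch(onPort, (n) => n.endsWith(".*") && host.startsWith(n.slice(0, -1)));
  if (trailing) return trailing;
  // 4. (Regex names would be tried here, in config order; omitted.)
  // 5. Default server: the one marked default_server, else the first block on the port.
  return onPort.find((b) => b.defaultServer === true) || onPort[0];
}
```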

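Step 3: If port 443, TLS handshake

Because you said `listen 443 ssl;`, Nginx immediately begins the TLS handshake:

- Sends its certificate chain (`fullchain.pem`) to the client.
- Proves it holds the matching private key (`privkey.pem`).
- Negotiates ciphers, etc.

The handshake happens before any HTTP headers are sent, so Nginx cannot see the `Host` header yet; it chooses which certificate to present from the hostname the client sends via SNI (Server Name Indication) during the handshake. Once the handshake finishes, we have an encrypted channel. Nginx decrypts any data the client sends and continues.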

Step 4: URI arrives → Nginx picks a `location`

Suppose the client requested `GET /api-docs/index.html`. Nginx tries to find the best `location` match:

- Exact literal match (`location = /api-docs/index.html`)? None.
- Prefix with `^~`? None.
- Regex matches? None configured.
- Longest prefix match: there is `location /api-docs/` (prefix length = 10) and `location /` (prefix length = 1). The longer one wins, so the `/api-docs/` block is chosen.

If the request had been `/users`, only `location /` would match.
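The matching procedure from this step, plus the `^~` and regex cases, can be sketched in TypeScript. It is a simplified model: nested and named locations are ignored, JavaScript `RegExp` stands in for Nginx’s PCRE, and `selectLocation`/`LocationBlock` are hypothetical names.

```typescript
// Simplified model of nginx's location selection.
type Modifier = "=" | "^~" | "" | "~" | "~*"; // "" means a plain prefix location

interface LocationBlock {
  modifier: Modifier;
  pattern: string;
}

function selectLocation(locations: LocationBlock[], uri: string): LocationBlock | undefined {
  // 1. An exact match ends the search immediately.
  const exact = locations.find((l) => l.modifier === "=" && l.pattern === uri);
  if (exact) return exact;

  // 2. Remember the longest matching prefix location (plain or ^~).
  let longest: LocationBlock | undefined;
  for (const l of locations) {
    const isPrefix = l.modifier === "" || l.modifier === "^~";
    if (isPrefix && uri.startsWith(l.pattern) && (!longest || l.pattern.length > longest.pattern.length)) {
      longest = l;
    }
  }
  // 3. If that prefix is marked ^~, regular expressions are not checked.
  if (longest && longest.modifier === "^~") return longest;

  // 4. Regex locations in config order; the first match wins (~* ignores case).
  for (const l of locations) {
    if (l.modifier === "~" && new RegExp(l.pattern).test(uri)) return l;
    if (l.modifier === "~*" && new RegExp(l.pattern, "i").test(uri)) return l;
  }
  // 5. No regex matched: fall back to the remembered longest prefix.
  return longest;
}
```

With the article’s two prefix locations (`/` and `/api-docs/`), `/api-docs/index.html` resolves to `/api-docs/` and `/users` to `/`, matching the walk-through above.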

Step 5: Forward or serve content

In the chosen `location` block, Nginx applies its directives. For `/api-docs/`, we see `proxy_pass http://127.0.0.1:5000/api-docs/;`. So Nginx:

- Opens a connection from itself (the “worker process”) to `127.0.0.1:5000`. Because this location does not set `proxy_http_version 1.1`, the proxied request uses Nginx’s default, HTTP/1.0.
- Rewrites the request path (here it stays the same: `/api-docs/...`).
- Sets the `Host: subdomain.example.com` header.
- Does not forward an `Upgrade: websocket` header from the client, since this location does not set the `Upgrade`/`Connection` headers; WebSocket support is configured only in `location /`.

It waits for the backend to respond, then relays the backend’s response back to the client over the TLS channel (encrypting on the fly).

7. Putting it all together: scaling to “multiple front-ends/back-ends”

Imagine you have:

- Domain A → Node.js on port 3000
- Domain B → Python/Flask on port 5000
- Domain C → static HTML from `/var/www/domainC.com/static`

You’d write three HTTPS server blocks plus three HTTP→HTTPS redirects. Rough outline:

```nginx
# ===== HTTP (port 80) blocks =====
server {
    listen 80;
    server_name domainA.com;
    return 301 https://$host$request_uri;
}
server {
    listen 80;
    server_name domainB.com;
    return 301 https://$host$request_uri;
}
server {
    listen 80;
    server_name domainC.com;
    return 301 https://$host$request_uri;
}

# ===== HTTPS (port 443) blocks =====
server {
    listen 443 ssl;
    server_name domainA.com;

    ssl_certificate /etc/letsencrypt/live/domainA.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/domainA.com/privkey.pem;
    include /etc/letsencrypt/options-ssl-nginx.conf;
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

    location / {
        proxy_pass http://127.0.0.1:3000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }
}

server {
    listen 443 ssl;
    server_name domainB.com;

    ssl_certificate /etc/letsencrypt/live/domainB.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/domainB.com/privkey.pem;
    include /etc/letsencrypt/options-ssl-nginx.conf;
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

    # Serve a REST API with two endpoints, maybe:
    location /api/ {
        proxy_pass http://127.0.0.1:5000/api/;
        proxy_set_header Host $host;
        # …other proxy headers…
    }

    location / {
        root /var/www/domainB.com/html;
        index index.html index.htm;
    }
}

server {
    listen 443 ssl;
    server_name domainC.com;

    ssl_certificate /etc/letsencrypt/live/domainC.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/domainC.com/privkey.pem;
    include /etc/letsencrypt/options-ssl-nginx.conf;
    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

    root /var/www/domainC.com/static;
    index index.html;

    # Maybe you also add:
    location ~* \.(css|js|png|jpg|gif|ico)$ {
        expires 7d;
        add_header Cache-Control "public";
    }
}
```

Three HTTP blocks (port 80) to force redirect → HTTPS.

Three HTTPS blocks (port 443) to actually serve each domain’s content (either proxied or static).

You can also serve closely related subdomains from a single server block using wildcards and/or regular expressions in `server_name`. Conceptually, though, each domain or subdomain maps to its own “matching rules + backend” in its server block(s).

8. Key takeaways about “why two servers” and “routing logic”

Two servers = one for HTTP (redirect) + one for HTTPS (serve).

The HTTP block is not useless; it just points browsers to HTTPS. If you omit it, your site won’t automatically upgrade from `http://` to `https://`.

Within the HTTPS server, you can have multiple `location` blocks to split `/api/`, `/api-docs/`, `/static/`, etc., each pointing to different upstreams or static roots.

You can define “upstream” groups if you need load balancing:

```nginx
upstream my_flask {
    server 127.0.0.1:5000;
    server 127.0.0.1:5001;
}

server {
    listen 443 ssl;
    server_name highload.example.com;

    location / {
        proxy_pass http://my_flask;
        # …same proxy headers…
    }
}
```

You can have as many server blocks as you like, each keyed to different `server_name`/`listen` combinations. Common pattern:

- One server block per domain on port 80 (redirect to HTTPS).
- One server block per domain on port 443 (serve actual content).

Matching precedence:

- Nginx picks the server block based on the incoming port and `Host` header.
- Inside that block, it picks a `location` based on the request URI.
- Once a `location` is chosen, the directives inside it (e.g., `proxy_pass`, `root`, `fastcgi_pass`) determine how to fetch or generate the response.

Final Notes

There’s nothing inherently “wrong” or “wasteful” about having two server blocks. It’s standard to handle HTTP→HTTPS separately from “serve the real site.”

If you want to add a third server block, simply copy/paste the template and change:

- `listen` (port or protocol)
- `server_name` (new domain/subdomain)
- `location`/`root`/`proxy_pass` to match whatever new backend you need.
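If you add sites often, the copy/paste step can be scripted. The sketch below renders the two-block pattern for one domain; `renderSite` and `SiteSpec` are hypothetical, the certificate paths assume Certbot’s default `/etc/letsencrypt/live/<domain>/` layout, and the certificate must already exist (e.g. issued with `certbot certonly --nginx -d <domain>`), otherwise `nginx -t` will fail.

```typescript
// Hypothetical generator for the "one port-80 + one port-443 block per domain"
// pattern; assumes Certbot has already issued a certificate for the domain.
interface SiteSpec {
  domain: string; // e.g. "blog.example.com"
  upstream?: string; // host:port of a proxied app, e.g. "127.0.0.1:4000"
  root?: string; // directory for a static site, e.g. "/var/www/blog"
}

function renderSite(site: SiteSpec): string {
  if (!site.upstream === !site.root) {
    throw new Error("set exactly one of upstream or root");
  }
  const live = `/etc/letsencrypt/live/${site.domain}`; // Certbot's default layout
  const content = site.upstream
    ? [
        "    location / {",
        `        proxy_pass http://${site.upstream};`,
        "        proxy_http_version 1.1;",
        "        proxy_set_header Upgrade $http_upgrade;",
        "        proxy_set_header Connection 'upgrade';",
        "        proxy_set_header Host $host;",
        "        proxy_cache_bypass $http_upgrade;",
        "    }",
      ]
    : [`    root ${site.root};`, "    index index.html;"];

  return [
    "server {",
    "    listen 80;",
    `    server_name ${site.domain};`,
    "    return 301 https://$host$request_uri;",
    "}",
    "",
    "server {",
    "    listen 443 ssl;",
    `    server_name ${site.domain};`,
    `    ssl_certificate ${live}/fullchain.pem;`,
    `    ssl_certificate_key ${live}/privkey.pem;`,
    "    include /etc/letsencrypt/options-ssl-nginx.conf;",
    "    ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;",
    "",
    ...content,
    "}",
  ].join("\n");
}
```

For example, `console.log(renderSite({ domain: "blog.example.com", upstream: "127.0.0.1:4000" }))` prints both blocks. You would save the output into your Nginx config (on Debian/Ubuntu-style installs, typically a file in `/etc/nginx/sites-available/` symlinked into `sites-enabled/`), then run `sudo nginx -t` and `sudo systemctl reload nginx`.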

Once you get comfortable, you can even chain more complex logic:

- Use `map` to set variables based on `$host`, then pick different back-ends in one block.
- Use `geo` to route traffic by client IP.
- Use `limit_req`/`limit_conn` inside `location` to throttle certain endpoints.

But at its core, Nginx’s decision process is always:

- Select the server block based on `listen` + `server_name`.
- Select the location based on URI pattern matching.
- Execute directives (static file, proxy, redirect, etc.).


Admin User
